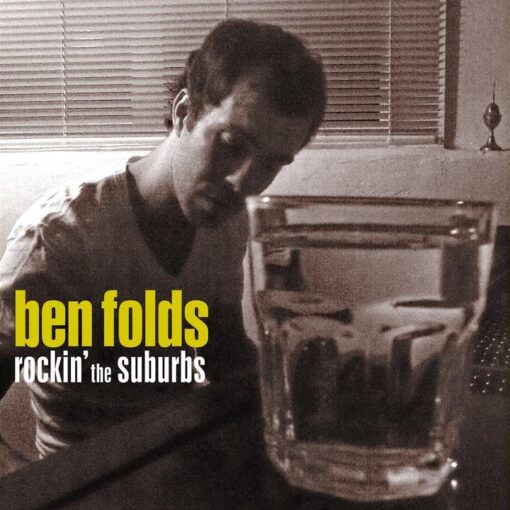

24 years ago, Ben Folds’ Rockin’ the Suburbs entered my bloodstream – and I still remember the bus ride to Montreal with the CD in my Discman. It wasn’t just the music though. Part of what mesmerized me is that the album was practically a one-man show. Ben Folds wrote, […]

Free Software

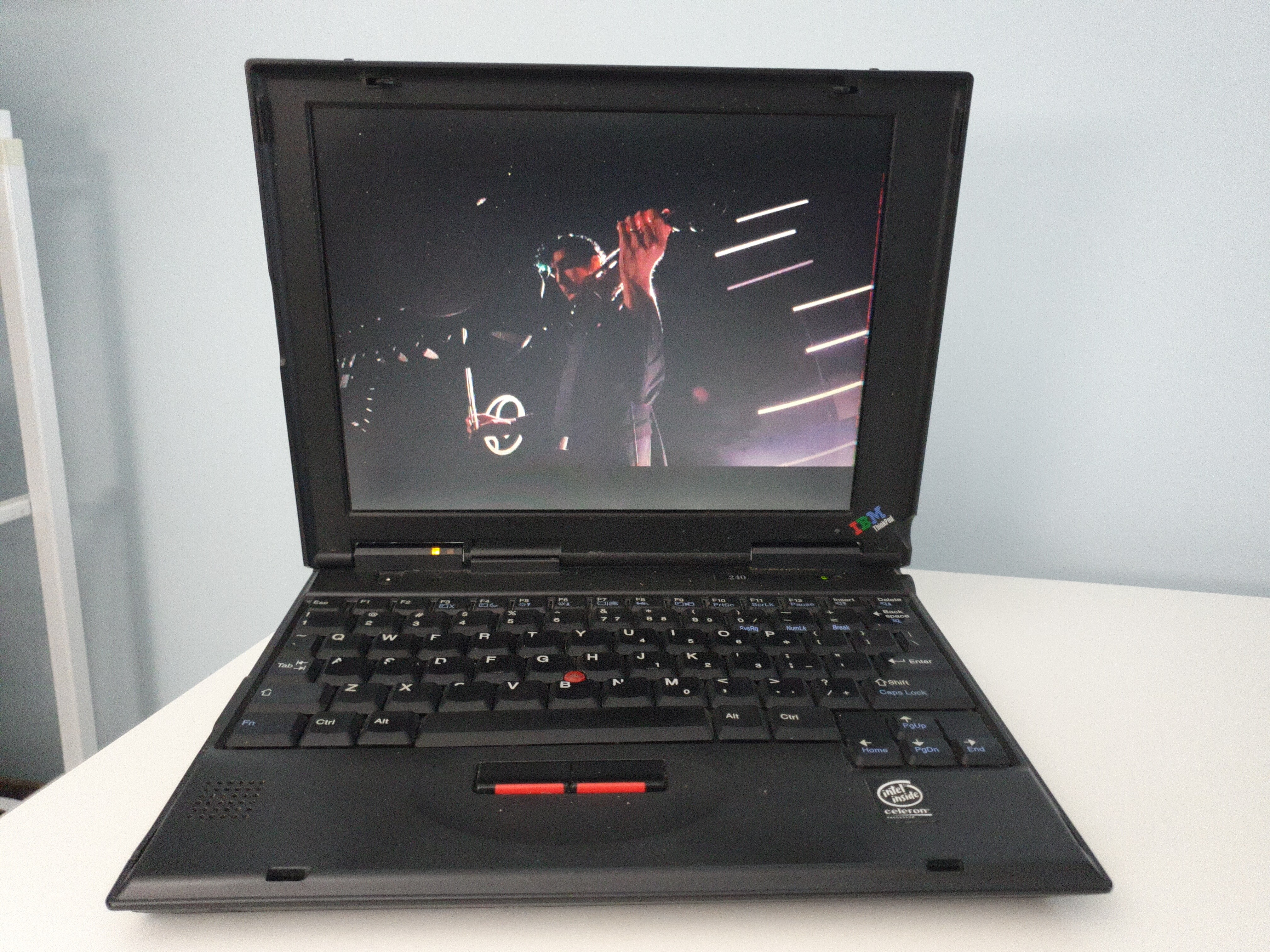

There are about 30 working desktops and laptops in my house, ranging from 1993 to present, but I think the coolest computer I have is my parents’ old IBM ThinkPad 240. One of the smallest ThinkPads ever made back in 1999, this is a vintage ThinkPad. It’s still operational today […]

Over the past few weeks, I’ve been expanding my two-screen setups into three-screen setups — a laptop plus two external monitors. Because I thought I only had one display port (HDMI), I went searching for USB display adapters. (I just realized today that my ThinkPad T470 has a USB-C / […]

Printers have always been terrible. I got away without buying a new printer since 2008 or 2010… until today. I bought an HP printer, because I’ve used HP printers for a long time, and mainly because of the existence of hplip. My goal, after all, is to have no nonsense […]

In Part 1 in this series, I explained why there are no easy answers to social media censorship. The problem is not a “Big Tech” conspiracy to silence particular voices. The problem is that too much of our public discourse is mediated by private platforms — centralized, proprietary, walled gardens. […]

I spent a few hours troubleshooting a problem with Thin when I upgraded from Redmine 2.5 to Redmine 3.0 on a Debian Wheezy server. I found a solution that’s worked for me. I’m not confident enough with Ruby and this setup to make a HowTo on the Redmine wiki, but […]

I got into a public fight with IceWeasel/Firefox 30 and the Mozilla sync service on pump.io last month, and was meaning to publish my “fix”… but it was so hacky, I don’t know which part of it actually worked. But, since it’s somewhat time-sensitive during this sync service transition, I […]

After some strange behaviour in gPodder 2.20.3 yesterday on my N900 (not responding to episode actions), I quit gPodder and tried to start it up again, but it would crash during startup everytime with an error about “database disk image malformed” from line 316 of dbsqlite.py on the query: “SELECT […]

This post is part of a series in which I am detailing my move away from centralized, proprietary network services. Previous posts in this series: email, feed reader, search. Finding a replacement for Google Calendar has been one of the most difficult steps so far in my degooglification process, but […]