There are no easy answers to speech, censorship and internet freedoms given the current state of the internet. Some solutions are better than others, but there’s no perfect answer. If someone suggests there’s a simple answer, they’re probably wrong. As Harvard internet law professor Jonathan Zittrain explained in June: The […]

Blog – Unity Behind Diversity

Refuting conspiracy theory arguments is exhausting. It’s like battling an army of orcs or white walkers. It’s not very hard to take down any given individual, but they just keep coming. The pattern is to overwhelm, and these arguments are almost always smokescreens for the true beliefs — and the […]

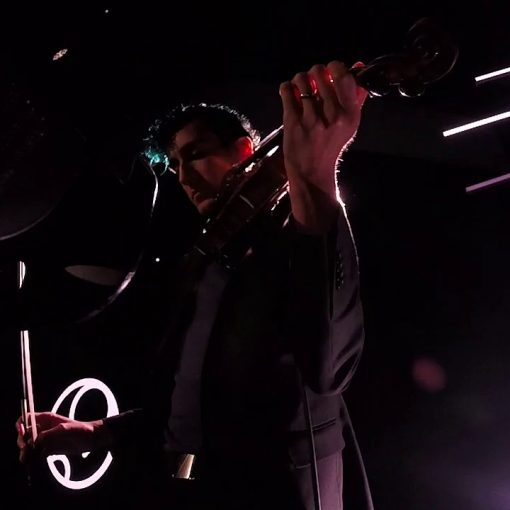

One of the personal silver linings of 2020 is that I’m making music again. In a way, I had never really stopped. But in a way, I had never really started. Origins: Subcreation from the Beginning My parents had started me in violin at age 4, but it wasn’t until […]

If I were to get a blood test today, there would still be some antibodies present from when The World Has Turned and Left Me Here entered my bloodstream sometime in Grade 9. While the entire blue album is constitutive, The World Has Turned has a special place secured in […]

When I first heard the song Light while working one day in the Ryerson SLC Amphitheatre, I instantly became a San Holo fan. I started working on a rock cover back in Spring 2019, but the project sat on the shelf for a while. After the launch of NMG Studios […]

When I get frustrated about people’s response to public health measures, I rant on social media. When I rant on social media, some of the conversations are painful, but… others are just fantastic. Perhaps I thrive on genuine dialogue, which forces you to try to understand another person, and to […]

Northrop Frye says that the lyric poem gets written because some normal activity has been blocked, the normal progression of time, and the poet has to write about that block before returning to the world of time. This Spring, while working on my home studio production, I came across Sam […]

This Spring during the lockdown, I took the opportunity to take some Recording Revolution courses, like Mixing University and Total Home Recording. I’m shifting my musical focus to my home studio for this next phase of life, and hope to work through the backlog of original songs I’ve written but […]

Since the start of the pandemic, many people have questioned the lockdowns, the emergency measures and overall government response. Especially after the curve was flattened in Canada, even more people question whether the measures are still necessary, or whether the goalposts are shifting. This defies common sense, the critique goes. […]